Daily Progress: Security code easy hacking for UVa student

Thursday, February 28th, 2008

The Daily Progress has an article about Karsten Nohl’s work on analyzing RFID tag security: Security code easy hacking for UVa student, 28 February 2008.

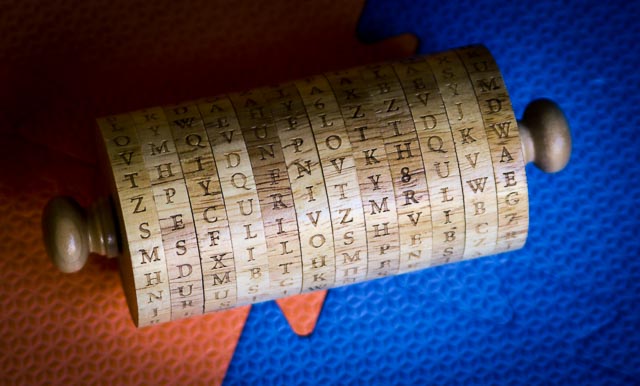

… Projects such as hacking the security code of a RFID chip is the “evil twin” of Nohl’s regular research, he said, which focuses on the development of cryptographic algorithms for computer security.

…

Nohl said that a more secure option for RFID security codes would be to rely on publicly known and time-tested security algorithms. NXP’s secret code, he said, is an example of “security by obscurity,” or the practice of keeping the code private and hoping hackers do not figure it out. Private algorithms, Nohl said, are more likely to have flaws and vulnerabilities.

“We found significant vulnerabilities in their algorithm,” he said. “By keeping it secret, they hurt themselves in the end.”

[Added 1 March] The story also appears in The Danville Register (Hackers claim they broke key security code). Blog reports include PogoWasRight and LiquidMatrix Security Digest.

[Added 2 March] More reports: Xenophilia, WAVY-TV.